The Absolute Minimum Every Software Developer Must Know About Unicode in 2023 (Still No Excuses!)

Author: Nikita (@tonsky)

Twenty years ago, Joel Spolsky wrote[1]:

There Ain’t No Such Thing As Plain Text.

It does not make sense to have a string without knowing what encoding it uses. You can no longer stick your head in the sand and pretend that “plain” text is ASCII.

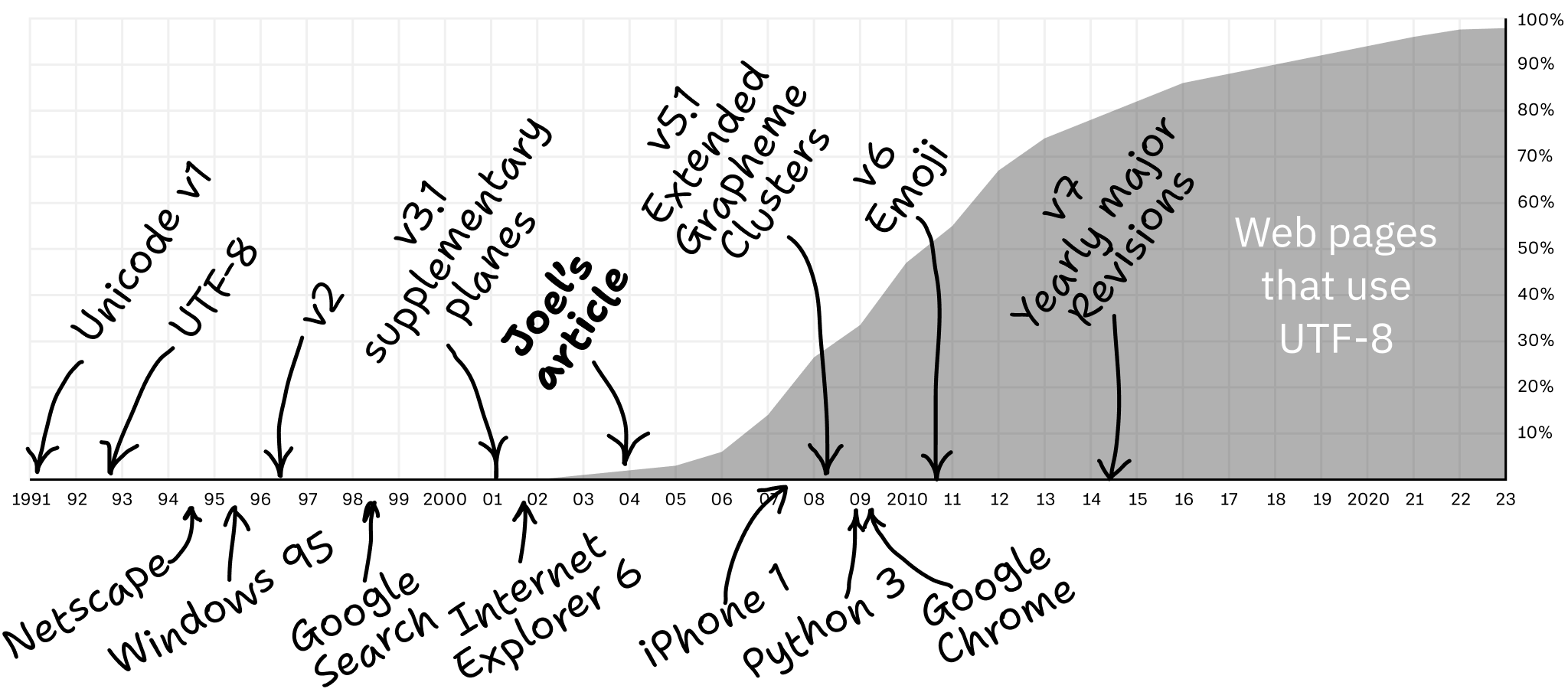

A lot has changed in 20 years. In 2003, the main question was: what encoding is this?

In 2023, it’s no longer a question: with a 98% probability, it’s UTF-8. Finally! We can stick our heads in the sand again!

The question now becomes: how do we use UTF-8 correctly? Let’s see!

What is Unicode?

Unicode is a standard that aims to unify all human languages, both past and present, and make them work with computers.

In practice, Unicode is a table that assigns unique numbers to different characters.

For example:

- The Latin letter A is assigned the number 65.
- The Arabic letter Seen س is 1587.
- The Katakana letter Tu ツ is 12484.
- The Musical Symbol G Clef 𝄞 is 119070.
- 💩 is 128169.
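To check these numbers yourself, Python’s built-in `ord()` returns a character’s code point and `chr()` goes the other way. A minimal sketch; the escapes only make the characters unambiguous in source code, and the descriptive names are mine:

```python
# Map each example character to its Unicode code point with ord(),
# and back with chr().
examples = {
    "A": "Latin letter A",
    "\u0633": "Arabic letter Seen",
    "\u30c4": "Katakana letter Tu",
    "\U0001d11e": "Musical Symbol G Clef",
    "\U0001f4a9": "Pile of Poo",
}

for char, name in examples.items():
    code_point = ord(char)
    assert chr(code_point) == char  # the mapping works in both directions
    print(f"{name}: {code_point}")
```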

Unicode refers to these numbers as code points.

Since everybody in the world agrees on which numbers correspond to which characters, and we all agree to use Unicode, we can read each other’s texts.

Unicode == character ⟷ code point.

How big is Unicode?

Unicode 統一碼有多大?

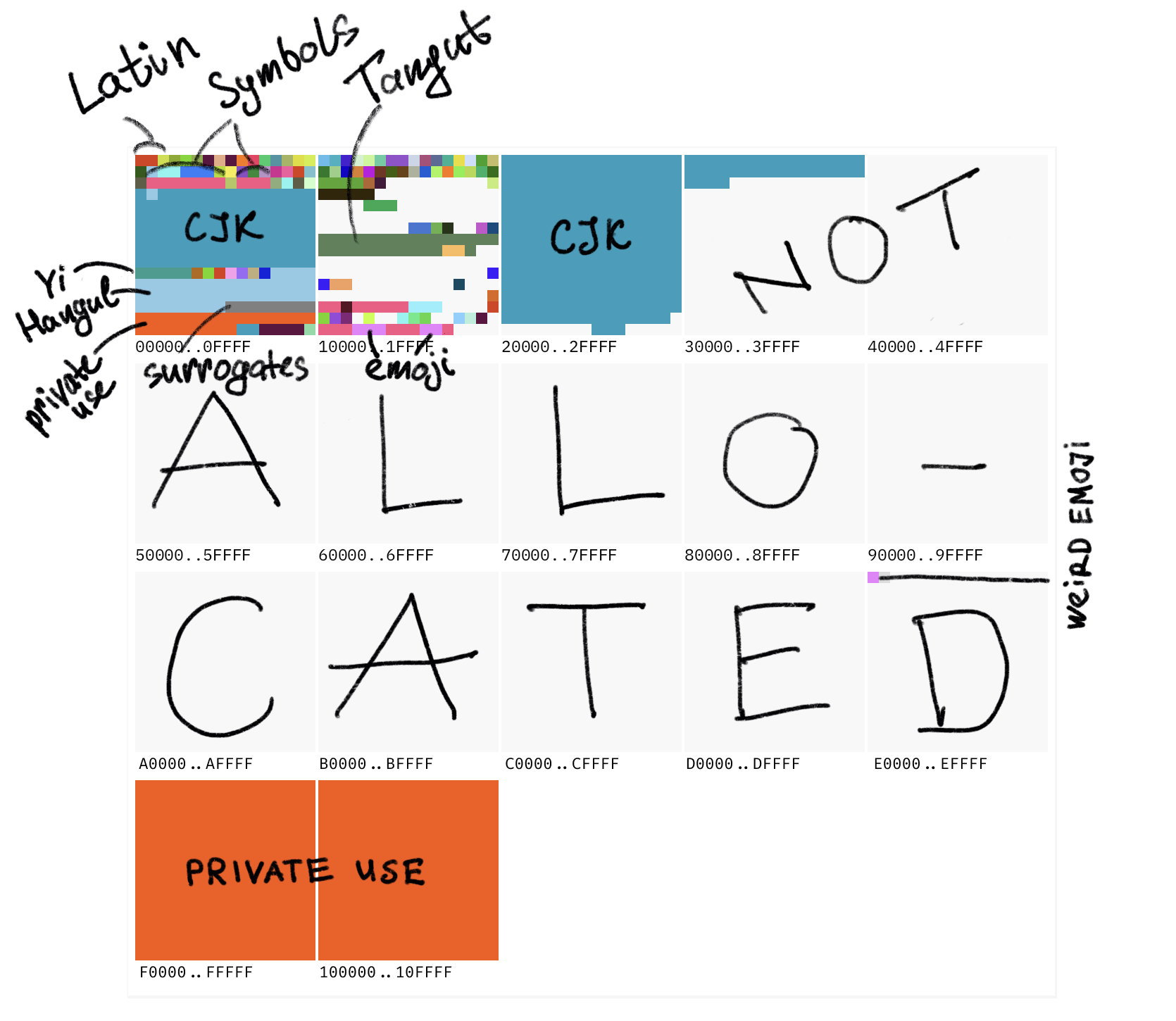

Currently, the largest defined code point is 0x10FFFF. That gives us a space of about 1.1 million code points.

目前,已被定義的最大碼位是 0x10FFFF。這給了我們大約 110 萬個碼位的空間。

About 170,000, or 15%, are currently defined. An additional 11% are reserved for private use. The rest, about 800,000 code points, are not allocated at the moment. They could become characters in the future.

目前已定義了大約 17 萬個碼位,佔 15%。另外 11% 用於私有使用。其餘的大約 80 萬個碼位目前沒有分配。它們可能在未來變成字元。

Here’s roughly how it looks:

Large square == plane == 65,536 code points. Small one == 256 code points. The entire ASCII is half of a small red square in the top left corner.
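The geometry of that picture is simple arithmetic. A small Python sketch; `plane()` is a hypothetical helper written for this illustration, while `sys.maxunicode` is the standard library’s own record of the top code point:

```python
import sys

# Python strings can hold any code point up to U+10FFFF, the top of the code space.
assert sys.maxunicode == 0x10FFFF

total = 0x10FFFF + 1      # code points 0..0x10FFFF
plane_size = 0x10000      # one "large square": 65,536 code points
small_square = 0x100      # one "small square": 256 code points
print(total, total // plane_size)   # 1114112 17
print(128 / small_square)           # 0.5: ASCII is half a small square

def plane(code_point: int) -> int:
    """Hypothetical helper: the plane number is everything above the low 16 bits."""
    return code_point >> 16

print(plane(ord("A")), plane(0x1F4A9))  # 0 1

# Anything above U+10FFFF is not a code point at all.
try:
    chr(0x110000)
except ValueError:
    print("0x110000 is out of range")
```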

What’s Private Use?

什麼是私用區?

These are code points reserved for app developers and will never be defined by Unicode itself.

這些碼位是為應用程式開發人員保留的,Unicode 統一碼自己永遠不會定義它們。

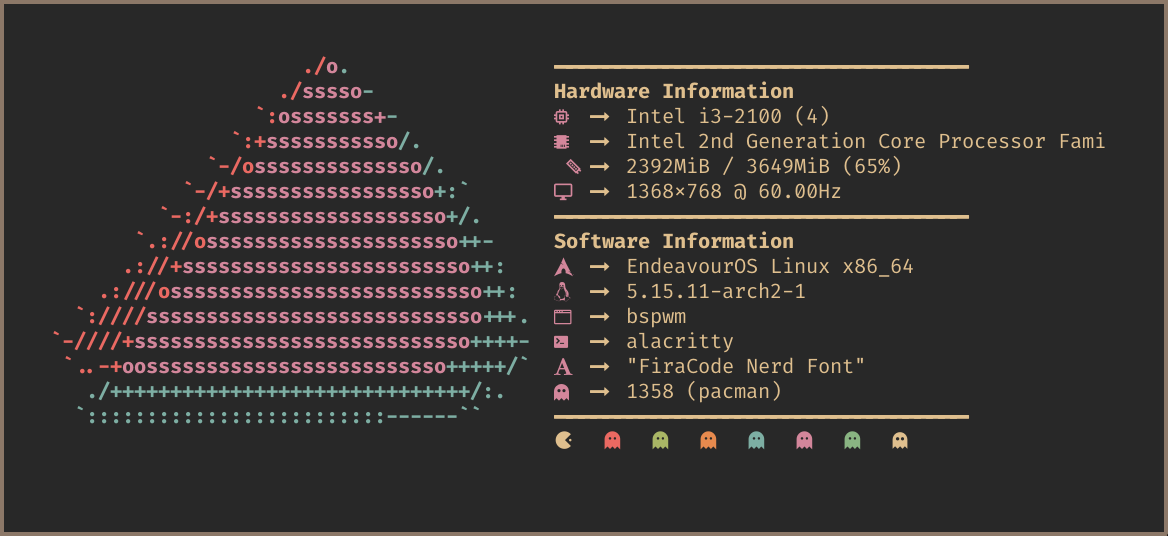

For example, there’s no place for the Apple logo in Unicode, so Apple puts it at U+F8FF which is within the Private Use block. In any other font, it’ll render as missing glyph , but in fonts that ship with macOS, you’ll see .

例如,Unicode 統一碼中沒有蘋果 logo 的位置,因此 Apple 將其放在私用區塊中的 U+F8FF。在任何其他字型中,它都將呈現為缺失的字形 ,但在 macOS 附帶的字型中,你就可以看到 。

The Private Use Area is mostly used by icon fonts:

私用區主要由圖示字型使用:
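Python’s `unicodedata` module reports private-use code points with the general category `Co`. A sketch; `in_bmp_private_use_area()` is a hypothetical helper that only checks the block in the first plane (U+E000..U+F8FF), not the supplementary private-use planes 15 and 16:

```python
import unicodedata

APPLE_LOGO = "\uf8ff"  # U+F8FF: shows the Apple logo only in Apple's fonts

# Unicode gives private-use code points the general category "Co".
print(unicodedata.category(APPLE_LOGO))  # Co

def in_bmp_private_use_area(ch: str) -> bool:
    """Hypothetical helper: is ch in the first-plane Private Use Area, U+E000..U+F8FF?"""
    return 0xE000 <= ord(ch) <= 0xF8FF

print(in_bmp_private_use_area(APPLE_LOGO), in_bmp_private_use_area("A"))  # True False
```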

What does U+1F4A9 mean?

It’s a convention for how to write code point values. The prefix U+ means, well, Unicode, and 1F4A9 is a code point number in hexadecimal.

Oh, and U+1F4A9 specifically is 💩.
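A sketch of converting to and from this notation in Python; both helpers are hypothetical names for illustration. The `:04X` format pads to at least four uppercase hex digits, which is the usual convention:

```python
def to_u_notation(ch: str) -> str:
    """Hypothetical helper: format a character's code point as U+XXXX."""
    return f"U+{ord(ch):04X}"

def from_u_notation(text: str) -> str:
    """Hypothetical helper: parse 'U+1F4A9'-style notation back into a character."""
    if not text.upper().startswith("U+"):
        raise ValueError(f"not in U+ notation: {text!r}")
    return chr(int(text[2:], 16))

print(to_u_notation("A"))                          # U+0041
print(to_u_notation("\U0001F4A9"))                 # U+1F4A9
print(from_u_notation("U+1F4A9") == "\U0001F4A9")  # True
```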

What’s UTF-8 then?

UTF-8 is an encoding. Encoding is how we store code points in memory.

The simplest possible encoding for Unicode is UTF-32. It simply stores code points as 32-bit integers. So U+1F4A9 becomes 00 01 F4 A9, taking up four bytes. Any other code point in UTF-32 will also occupy four bytes. Since the highest possible code point is U+10FFFF, any code point is guaranteed to fit.
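Python’s codecs show the same bytes. A sketch, assuming the big-endian variant `utf-32-be` so the output matches the byte order written above (plain `utf-32` also prepends a 4-byte byte-order mark):

```python
pile_of_poo = "\U0001F4A9"

# Big-endian UTF-32 with no byte-order mark: the code point as a 32-bit integer.
encoded = pile_of_poo.encode("utf-32-be")
print(encoded.hex(" "))  # 00 01 f4 a9
print(len(encoded))      # 4

# Every code point takes exactly 4 bytes, so code point count == byte count / 4.
text = "A\u0633\U0001F4A9"
assert len(text.encode("utf-32-be")) == 4 * len(text)

# The plain "utf-32" codec prepends a 4-byte byte-order mark (BOM).
print(len(pile_of_poo.encode("utf-32")))  # 8
```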

UTF-16 and UTF-8 are less straightforward, but the ultimate goal is the same: to take a code point and encode it as bytes.

Encoding is what you’ll actually deal with as a programmer.

How many bytes are in UTF-8?

UTF-8 is a variable-length encoding. A code point might be encoded as a sequence of one to four bytes.

This is how it works:

| Code point | Byte 1 | Byte 2 | Byte 3 | Byte 4 |
|---|---|---|---|---|
| U+0000..007F | 0xxxxxxx | | | |
| U+0080..07FF | 110xxxxx | 10xxxxxx | | |
| U+0800..FFFF | 1110xxxx | 10xxxxxx | 10xxxxxx | |
| U+10000..10FFFF | 11110xxx | 10xxxxxx | 10xxxxxx | 10xxxxxx |

The x positions are filled with the bits of the code point, most significant bits first.

If you combine this with the Unicode table, you’ll see that English is encoded with 1 byte; Cyrillic, Latin European languages, Hebrew, and Arabic need 2; and Chinese, Japanese, Korean, other Asian languages, and Emoji need 3 or 4.
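The table translates almost line by line into code. Here is a teaching sketch of a single-code-point encoder in Python (`utf8_encode` is a hypothetical name; real code should just call `str.encode("utf-8")`), checked against the built-in codec for ASCII, Cyrillic, Katakana, and emoji:

```python
def utf8_encode(code_point: int) -> bytes:
    """Encode one code point by following the UTF-8 table (a teaching sketch)."""
    if not 0 <= code_point <= 0x10FFFF:
        raise ValueError("not a Unicode code point")
    if 0xD800 <= code_point <= 0xDFFF:
        raise ValueError("surrogates cannot be encoded in UTF-8")
    if code_point <= 0x7F:                       # 0xxxxxxx
        return bytes([code_point])
    if code_point <= 0x7FF:                      # 110xxxxx 10xxxxxx
        return bytes([0xC0 | (code_point >> 6),
                      0x80 | (code_point & 0x3F)])
    if code_point <= 0xFFFF:                     # 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0xE0 | (code_point >> 12),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    return bytes([0xF0 | (code_point >> 18),     # 11110xxx + three 10xxxxxx
                  0x80 | ((code_point >> 12) & 0x3F),
                  0x80 | ((code_point >> 6) & 0x3F),
                  0x80 | (code_point & 0x3F)])

# English, Cyrillic, Katakana, emoji: 1, 2, 3 and 4 bytes.
for ch in ["A", "\u0436", "\u30c4", "\U0001F4A9"]:
    mine = utf8_encode(ord(ch))
    assert mine == ch.encode("utf-8")  # agrees with Python's built-in codec
    print(f"U+{ord(ch):04X}", mine.hex(" "), len(mine))
```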

A few important points here:

First, UTF-8 is byte-compatible with ASCII. The code points 0..127, the former ASCII, are encoded with one byte, and it’s the same exact byte. U+0041 (A, Latin Capital Letter A) is just 41, one byte.

Any pure ASCII text is also a valid UTF-8 text, and any UTF-8 text that only uses code points 0..127 can be read as ASCII directly.

Second, UTF-8 is space-efficient for basic Latin. That was one of its main selling points over UTF-16. It might not be fair for texts all over the world, but for technical strings like HTML tags or JSON keys, it makes sense.

On average, UTF-8 tends to be a pretty good deal, even for non-English computers. And for English, there’s no comparison.
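A quick way to see the trade-off is to encode a few strings both ways in Python. The sample strings are arbitrary picks for illustration, and `utf-16-le` is used so that no byte-order mark is counted:

```python
samples = {
    "JSON key": '{"id": 42}',
    "Russian": "\u041d\u0438\u043a\u043e\u043b\u0430\u0439",  # Николай
    "Chinese": "\u4e2d\u6587",                                 # 中文
}

for label, text in samples.items():
    utf8 = len(text.encode("utf-8"))
    utf16 = len(text.encode("utf-16-le"))  # "-le" variant: no byte-order mark
    print(f"{label}: UTF-8 {utf8} bytes, UTF-16 {utf16} bytes")
```

This prints 10 vs 20 bytes for the JSON snippet, 14 vs 14 for the Russian name, and 6 vs 4 for the two Chinese characters: UTF-8 wins big on ASCII, ties on Cyrillic, and loses on CJK.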

Third, UTF-8 has error detection and recovery built in. The first byte’s prefix always looks different from bytes 2-4. This way you can always tell whether you are looking at a complete and valid sequence of UTF-8 bytes or whether something is missing (for example, you jumped into the middle of the sequence). Then you can correct that by moving forward or backward until you find the beginning of the correct sequence.
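That recovery property is what makes safe truncation possible. A Python sketch (the helper names are hypothetical): continuation bytes always match the bit pattern `10xxxxxx`, so from any offset you can step back to the start of the current sequence and cut there instead of mid-character. The sample string is the same one as in the Java example below:

```python
def is_continuation(byte: int) -> bool:
    """Bytes 2-4 of a UTF-8 sequence always have the form 10xxxxxx."""
    return (byte & 0xC0) == 0x80

def sequence_start(data: bytes, i: int) -> int:
    """Step back from offset i to the first byte of the sequence containing it."""
    while i > 0 and is_continuation(data[i]):
        i -= 1
    return i

def truncate_utf8(data: bytes, max_bytes: int) -> bytes:
    """Cut valid UTF-8 to at most max_bytes without splitting a code point."""
    if len(data) <= max_bytes:
        return data
    # data[max_bytes] is the first byte that gets dropped. If it is a
    # continuation byte, its whole sequence must be dropped with it.
    return data[:sequence_start(data, max_bytes)]

text = "Аналитика"               # Cyrillic: 2 bytes per letter in UTF-8
data = text.encode("utf-8")

print(data[:11].decode("utf-8", errors="replace"))  # Анали� (cut mid-letter)
print(truncate_utf8(data, 11).decode("utf-8"))      # Анали
```

Decoding the raw 11-byte slice with `errors="replace"` produces exactly the � discussed below, while `truncate_utf8` simply drops the incomplete letter.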

And a couple of important consequences:

- You CAN’T determine the length of the string by counting bytes.
- You CAN’T randomly jump into the middle of the string and start reading.
- You CAN’T get a substring by cutting at arbitrary byte offsets. You might cut off part of the character.

Those who do will eventually meet this bad boy: �

What’s �?

U+FFFD, the Replacement Character, is simply another code point in the Unicode table. Apps and libraries can use it when they detect Unicode errors.

If you cut half of the code point off, there’s not much left to do with the other half, except displaying an error. That’s when � is used.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

var bytes = "Аналитика".getBytes(StandardCharsets.UTF_8);  // 2 bytes per Cyrillic letter
var partial = Arrays.copyOfRange(bytes, 0, 11);           // 5 letters + half of "т"
new String(partial, StandardCharsets.UTF_8);              // => "Анали�"
```

Wouldn’t UTF-32 be easier for everything?

NO.

UTF-32 is great for operating on code points. Indeed, if every code point is always 4 bytes, then strlen(s) == sizeof(s) / 4, substring(0, 3) == bytes[0, 12], etc.

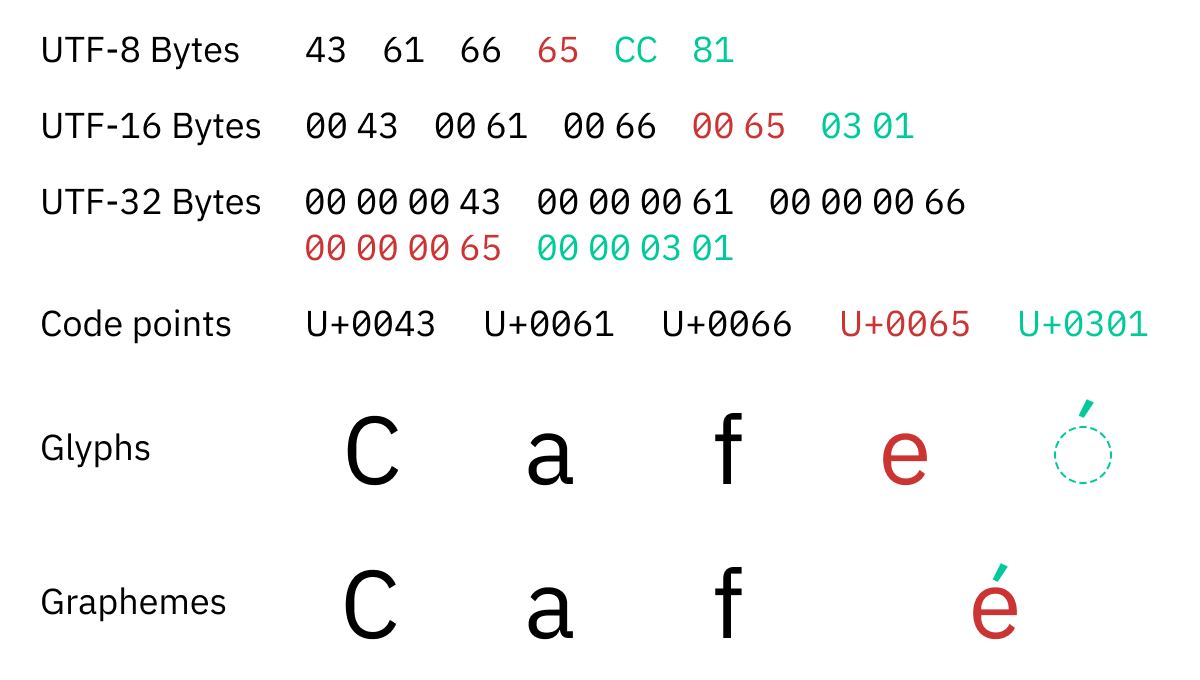

The problem is, you don’t want to operate on code points. A code point is not a unit of writing; one code point is not always a single character. What you should be iterating on is called “extended grapheme clusters”, or graphemes for short.

A grapheme[2] is a minimally distinctive unit of writing in the context of a particular writing system. ö is one grapheme. é is one too. And 각. Basically, a grapheme is what the user thinks of as a single character.

The problem is, in Unicode, some graphemes are encoded with multiple code points!

For example, é (a single grapheme) is encoded in Unicode as e (U+0065 Latin Small Letter E) + ´ (U+0301 Combining Acute Accent). Two code points!
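You can list the two code points in Python with `unicodedata.name()`. A minimal sketch; the decomposed and precomposed spellings are written with escapes because they look identical on screen:

```python
import unicodedata

decomposed = "e\u0301"   # e + COMBINING ACUTE ACCENT
precomposed = "\u00e9"   # é as a single code point

print(len(decomposed), len(precomposed))  # 2 1
print(decomposed == precomposed)          # False, although both render as é
for ch in decomposed:
    print(f"U+{ord(ch):04X}", unicodedata.name(ch))
# U+0065 LATIN SMALL LETTER E
# U+0301 COMBINING ACUTE ACCENT
```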

It can also be more than two:

- ☹️ is U+2639 + U+FE0F
- 👨‍🏭 is U+1F468 + U+200D + U+1F3ED
- 🚵🏻‍♀️ is U+1F6B5 + U+1F3FB + U+200D + U+2640 + U+FE0F
- y̖̠͍̘͇͗̏̽̎͞ is U+0079 + U+0316 + U+0320 + U+034D + U+0318 + U+0347 + U+0357 + U+030F + U+033D + U+030E + U+035E

There’s no limit, as far as I know.

Remember, we are talking about code points here. Even in the widest encoding, UTF-32, 👨‍🏭 will still take three 4-byte units to encode, and it still needs to be treated as a single character.

If the analogy helps, we can think of Unicode itself (without any encodings) as being variable-length.

An Extended Grapheme Cluster is a sequence of one or more Unicode code points that must be treated as a single, unbreakable character.

Therefore, we get all the problems we have with variable-length encodings, but now on the code point level: you can’t take only a part of the sequence; it should always be selected, copied, edited, or deleted as a whole.

Failure to respect grapheme clusters leads to bugs like this:

or this:
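Bugs of this kind are easy to reproduce. A minimal Python sketch, assuming the naive approach of reversing a string code point by code point (which is what slicing with `[::-1]` does):

```python
text = "noe\u0308l"   # "noël", with the diaeresis as a combining mark
reversed_by_code_point = text[::-1]

print(reversed_by_code_point)  # the diaeresis now sits on the "l"
print([f"U+{ord(c):04X}" for c in reversed_by_code_point])
# ['U+006C', 'U+0308', 'U+0065', 'U+006F', 'U+006E']
```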

Using UTF-32 instead of UTF-8 will not make your life any easier with regard to extended grapheme clusters. And extended grapheme clusters are what you should care about.

Code points: 🥱. Graphemes: 😍

Is Unicode hard only because of emojis?

Not really. Extended Grapheme Clusters are also used for living, actively used languages. For example:

- ö (German) is a single character, but multiple code points (U+006F U+0308).
- ą́ (Lithuanian) is U+00E1 U+0328.
- 각 (Korean) is U+1100 U+1161 U+11A8.

So no, it’s not just about emojis.
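Python’s `unicodedata.normalize()` (normalization is covered further down) shows that these really are multi-code-point graphemes: NFC can fuse some of them into one precomposed code point, but ą́ has no precomposed form, so it stays two code points even though it is one grapheme. A sketch with ASCII labels of my own:

```python
import unicodedata

examples = {
    "German o + diaeresis": "o\u0308",
    "Lithuanian a-acute + ogonek": "\u00e1\u0328",
    "Korean conjoining jamo": "\u1100\u1161\u11a8",
}

for label, text in examples.items():
    nfc = unicodedata.normalize("NFC", text)
    print(f"{label}: {len(text)} code points -> {len(nfc)} after NFC")
# German o + diaeresis: 2 code points -> 1 after NFC
# Lithuanian a-acute + ogonek: 2 code points -> 2 after NFC
# Korean conjoining jamo: 3 code points -> 1 after NFC
```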

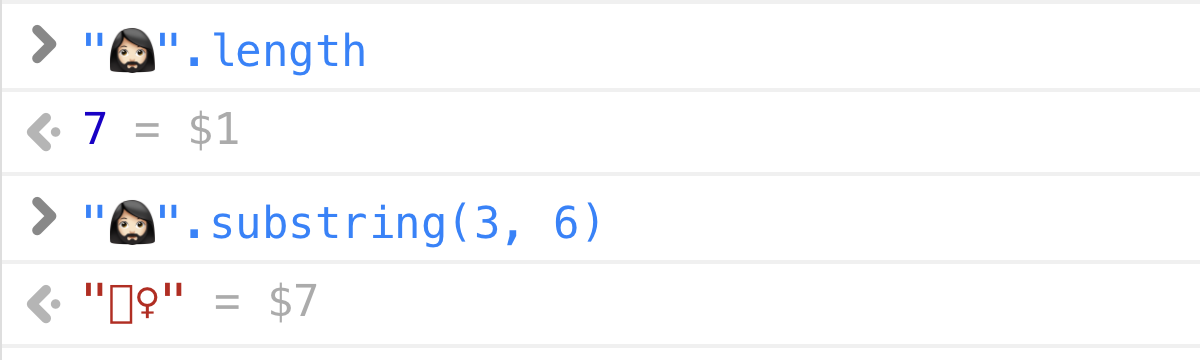

What’s "🤦🏼‍♂️".length?

The question is inspired by this brilliant article.

Different programming languages will happily give you different answers.

Python 3:

```python
>>> len("🤦🏼‍♂️")
5
```

JavaScript / Java / C#:

```
>> "🤦🏼‍♂️".length
7
```

Rust:

```rust
println!("{}", "🤦🏼‍♂️".len());
// => 17
```

As you can guess, different languages use different internal string representations (UTF-32, UTF-16, UTF-8) and report length in whatever units they store characters in (ints, shorts, bytes).
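All three answers can be reproduced from one Python string by counting in different units. A sketch that spells the emoji out with escapes (so the zero-width joiner survives copying) and assumes `utf-16-le` so that no byte-order mark is counted:

```python
# 🤦🏼‍♂️ spelled out: face palm, skin tone modifier, zero-width joiner,
# male sign, variation selector-16.
facepalm = "\U0001F926\U0001F3FC\u200D\u2642\uFE0F"

code_points = len(facepalm)                           # Python 3 len() counts code points
utf16_units = len(facepalm.encode("utf-16-le")) // 2  # JS/Java/C# .length counts UTF-16 units
utf8_bytes = len(facepalm.encode("utf-8"))            # Rust .len() counts UTF-8 bytes

print(code_points, utf16_units, utf8_bytes)  # 5 7 17
```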

BUT! If you ask any normal person, one that isn’t burdened with computer internals, they’ll give you a straight answer: 1. The length of 🤦🏼‍♂️ is 1.

That’s what extended grapheme clusters are all about: what humans perceive as a single character. And in this case, 🤦🏼‍♂️ is undoubtedly a single character.

The fact that 🤦🏼‍♂️ is actually encoded as 5 code points (U+1F926 U+1F3FC U+200D U+2642 U+FE0F) is a mere implementation detail. It should not be broken apart, it should not be counted as multiple characters, the text cursor should not be positioned inside it, it shouldn’t be partially selected, etc.

For all intents and purposes, this is an atomic unit of text. Internally, it could be encoded in any way, but a user-facing API should treat it as a whole.

The only modern language that gets it right is Swift:

```swift
print("🤦🏼‍♂️".count)
// => 1
```

Basically, there are two layers:

- Internal, computer-oriented. How to copy strings, send them over the network, store them on disk, etc. This is where you need encodings like UTF-8. Swift uses UTF-8 internally, but it might as well be UTF-16 or UTF-32. What’s important is that you only use it to copy strings as a whole and never to analyze their content.
- External, human-facing API. Character count in UI. Taking the first 10 characters to generate a preview. Searching in text. Methods like `.count` or `.substring`. Swift gives you a view that pretends the string is a sequence of grapheme clusters. And that view behaves like any human would expect: it gives you 1 for `"🤦🏼‍♂️".count`.

I hope more languages adopt this design soon.

Question to the reader: what do you think "ẇ͓̞͒͟͡ǫ̠̠̉̏͠͡ͅr̬̺͚̍͛̔͒͢d̠͎̗̳͇͆̋̊͂͐".length should be?

How do I detect extended grapheme clusters then?

Unfortunately, most languages choose the easy way out and let you iterate through strings with 1-2-4-byte chunks, but not with grapheme clusters.

It makes no sense and has no semantics, but since it’s the default, programmers don’t think twice, and we see corrupted strings as the result:

“I know, I’ll use a library to do strlen()!” said nobody, ever.

But that’s exactly what you should be doing! Use a proper Unicode library! Yes, for basic stuff like strlen or indexOf or substring!

For example:

- C/C++/Java: use ICU. It’s a library from Unicode itself that encodes all the rules about text segmentation.
- C#: use TextElementEnumerator, which is kept up to date with Unicode as far as I can tell.
- Swift: just stdlib. Swift does the right thing by default.
- UPD: Erlang/Elixir seem to be doing the right thing, too.
- For other languages, there’s probably a library or binding for ICU.
- Roll your own. Unicode publishes rules and tables in a machine-readable format, and all the libraries above are based on them.

But whatever you choose, make sure it’s on a recent enough version of Unicode (15.1 at the moment of writing), because the definition of graphemes changes from version to version. For example, Java’s java.text.BreakIterator is a no-go: it’s based on a very old version of Unicode and not updated.

Use a library

IMO, the whole situation is a shame. Unicode should be in the stdlib of every language by default. It’s the lingua franca of the internet! It’s not even new: we’ve been living with Unicode for 20 years now.

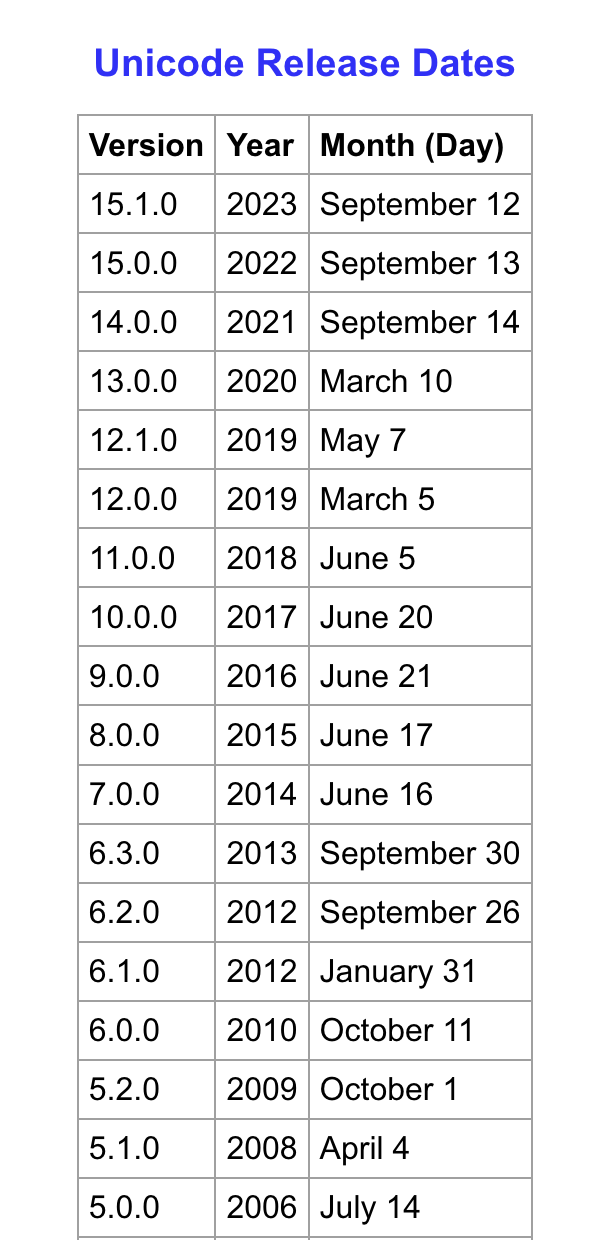

Wait, rules are changing?

Yes! Ain’t it cool?

(I know, it ain’t)

Starting roughly in 2014, Unicode has been releasing a major revision of their standard every year. This is where you get your new emojis from: Android and iOS updates in the Fall usually include the newest Unicode standard among other things.

What’s sad for us is that the rules defining grapheme clusters change every year as well. What is considered a sequence of two or three separate code points today might become a grapheme cluster tomorrow! There’s no way to know! Or prepare!

Even worse, different versions of your own app might be running on different Unicode standards and report different string lengths!

But that’s the reality we live in. You don’t really have a choice here. You can’t ignore Unicode or Unicode updates if you want to stay relevant and provide a decent user experience. So, buckle up, embrace, and update.

Update yearly

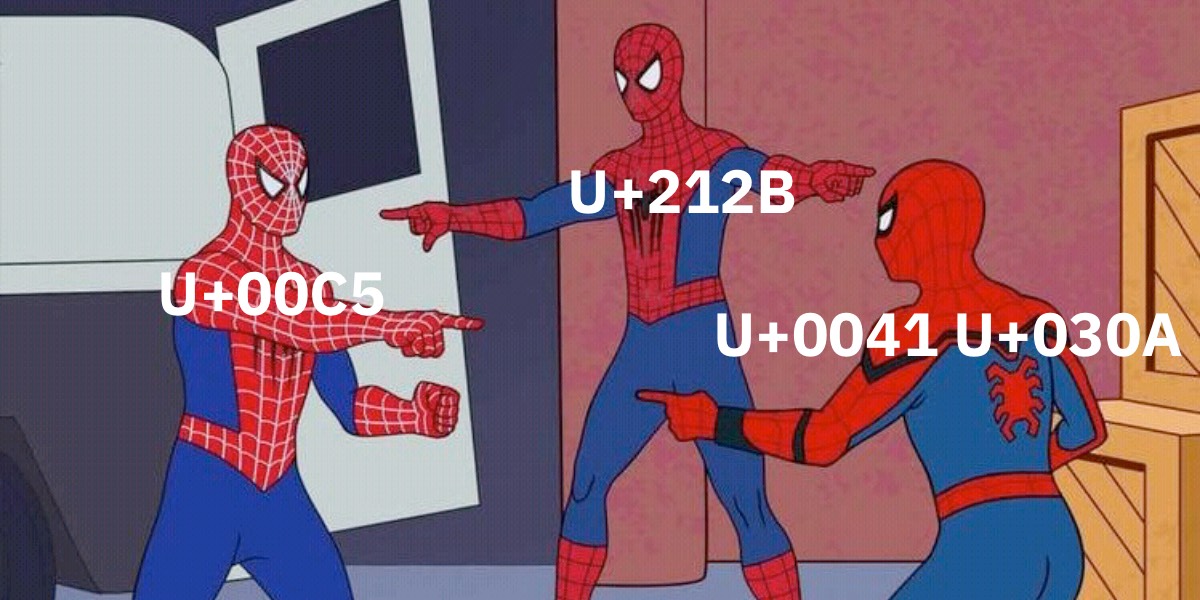

Why is "Å" !== "Å" !== "Å"?

Copy any of these to your JavaScript console:

```javascript
"\u00C5" === "A\u030A";  // U+00C5 vs A + U+030A
"A\u030A" === "\u212B";  // A + U+030A vs U+212B
"\u212B" === "\u00C5";   // U+212B vs U+00C5
```

What do you get? False? You should get false, and it’s not a mistake.

Remember earlier when I said that ö is two code points, U+006F U+0308? Basically, Unicode offers more than one way to write characters like ö or Å. You can:

- Compose Å from normal Latin A + a combining character (U+030A Combining Ring Above),
- OR there’s also a pre-composed code point U+00C5 that does that for you.

They will look the same (Å vs Å), they should work the same, and for all intents and purposes, they are considered exactly the same. The only difference is the byte representation.


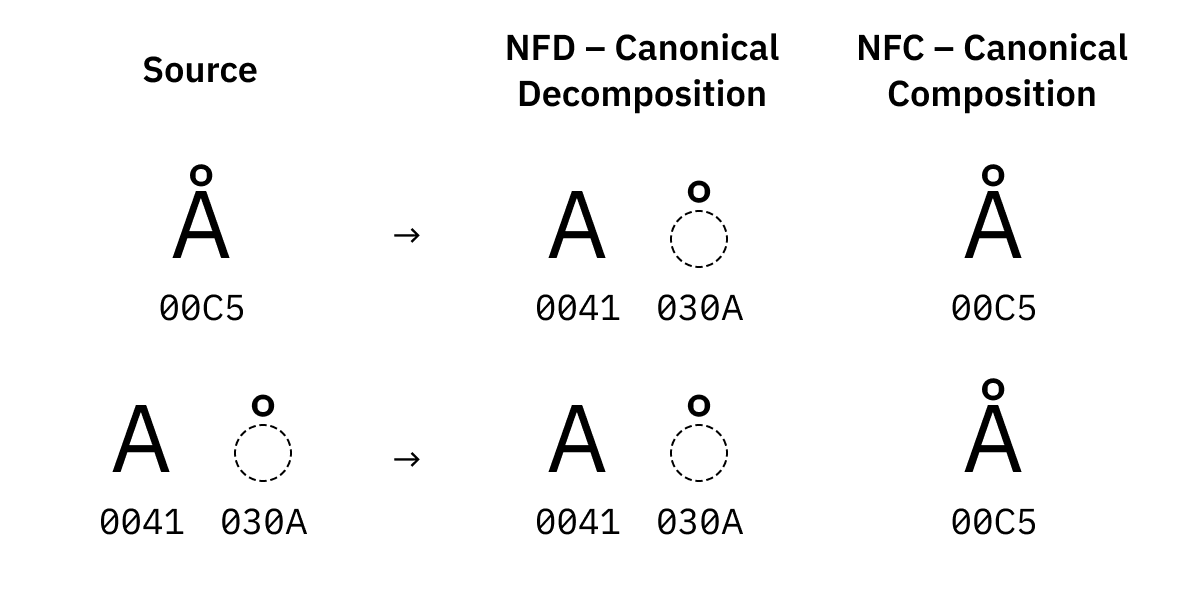

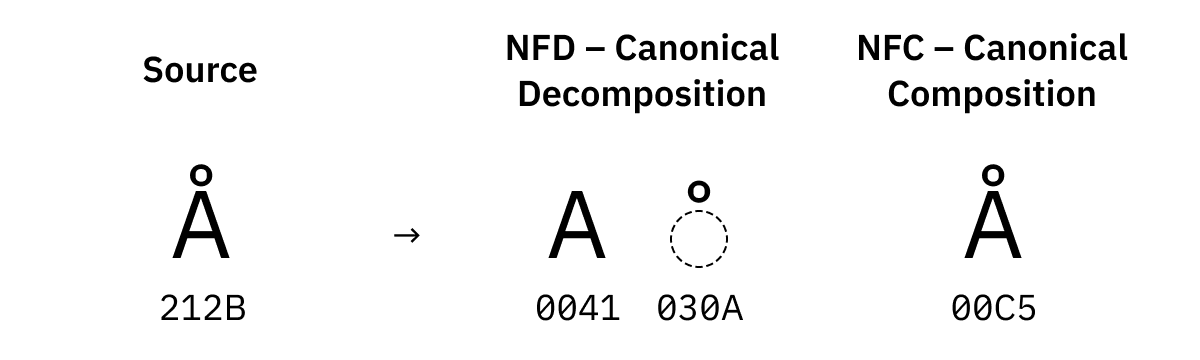

That’s why we need normalization. There are four forms:

NFD tries to explode everything to the smallest possible pieces, and also sorts pieces in a canonical order if there is more than one.

NFC, on the other hand, tries to combine everything into pre-composed form if one exists.

For some characters there are also multiple versions of them in Unicode. For example, there’s U+00C5 Latin Capital Letter A with Ring Above, but there’s also U+212B Angstrom Sign, which looks the same.

These are also replaced during normalization:
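The canonical forms can be tried with Python’s standard `unicodedata.normalize()`. A minimal sketch using the three spellings of Å discussed above:

```python
import unicodedata

precomposed = "\u00c5"  # LATIN CAPITAL LETTER A WITH RING ABOVE
combining = "A\u030a"   # A + COMBINING RING ABOVE
angstrom = "\u212b"     # ANGSTROM SIGN

print(precomposed == combining, combining == angstrom)  # False False

forms = (precomposed, combining, angstrom)
nfc = [unicodedata.normalize("NFC", s) for s in forms]
nfd = [unicodedata.normalize("NFD", s) for s in forms]

# After normalization, all three spellings become identical.
print([f"U+{ord(c):04X}" for c in nfc[0]], len(set(nfc)))  # ['U+00C5'] 1
print([f"U+{ord(c):04X}" for c in nfd[0]], len(set(nfd)))  # ['U+0041', 'U+030A'] 1
```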

NFD and NFC are called “canonical normalization”. Another two forms are “compatibility normalization”:

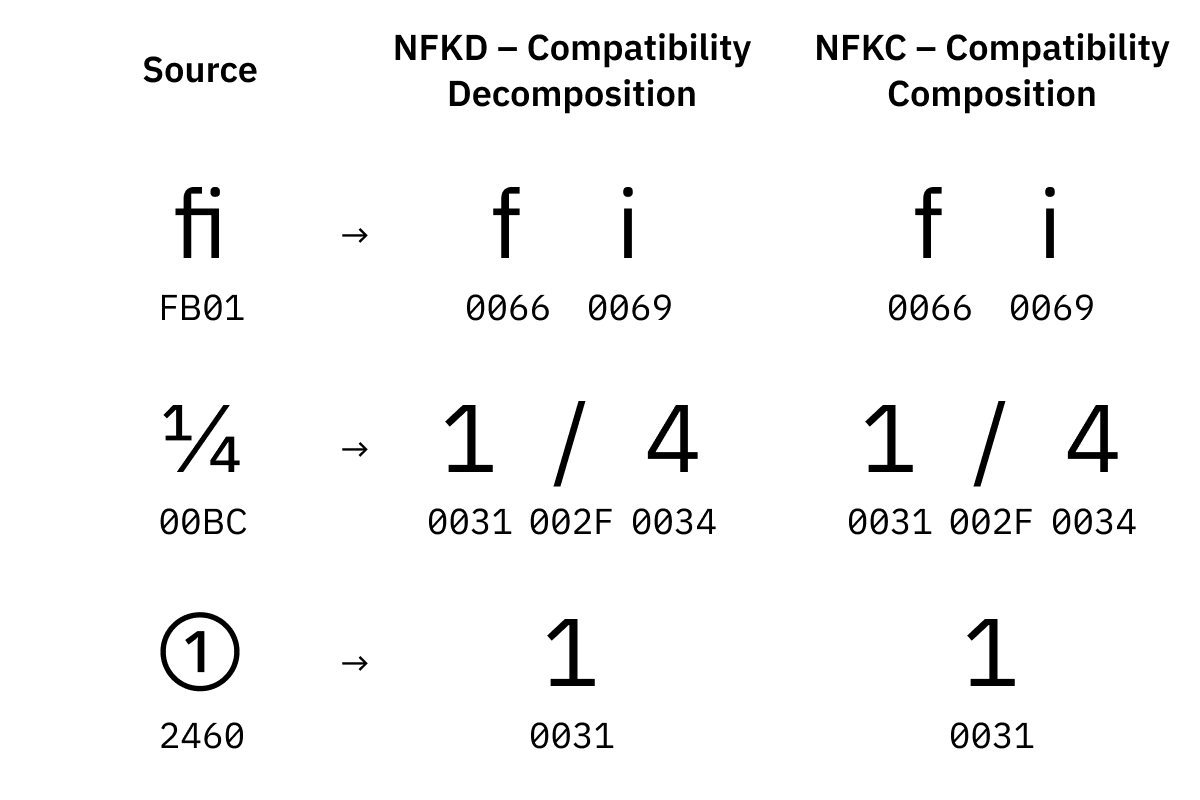

NFD 和 NFC 被稱為「規範歸一化」。另外兩種形式是「相容歸一化」:

NFKD tries to explode everything and replaces visual variants with default ones.

NFKD 嘗試將所有東西分解開來,並用預設的替換視覺變體。

NFKC tries to combine everything while replacing visual variants with default ones.

NFKC 嘗試將所有東西組合起來,同時用預設的替換視覺變體。

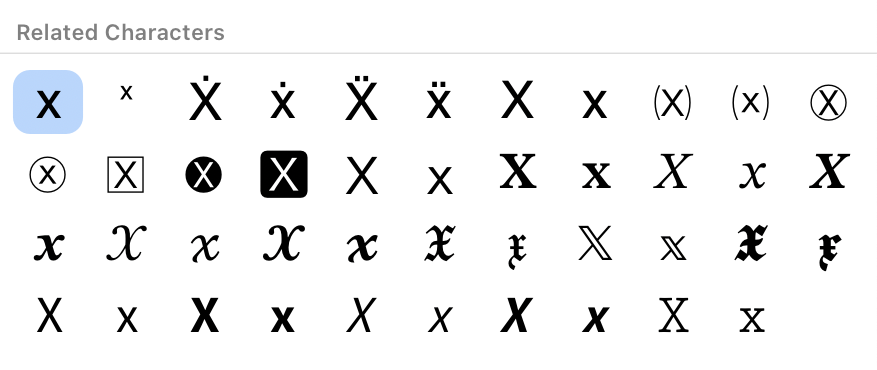

Visual variants are separate Unicode code points that represent the same character but are supposed to render differently. Like ① or ⁹ or 𝕏. We want to be able to find both "x" and "2" in a string like "𝕏²", don’t we?

Why does the ﬁ ligature (U+FB01) even have its own code point? No idea. A lot can happen in a million characters.

Before comparing strings or searching for a substring, normalize!
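For search, one common approach is to apply NFKC and then fold case. A sketch; `search_key` is a hypothetical helper, and pairing NFKC with `str.casefold()` is my assumption about what a search feature wants, not something the standard mandates for every use:

```python
import unicodedata

def search_key(text: str) -> str:
    """Hypothetical helper: NFKC folds visual variants, casefold() ignores case."""
    return unicodedata.normalize("NFKC", text).casefold()

print(search_key("\U0001d54f\u00b2"))  # 𝕏² -> x2
print(search_key("\u2460\u2079"))      # ①⁹ -> 19
print(search_key("\ufb01"))            # ﬁ ligature -> fi

haystack = search_key("\U0001d54f\u00b2")
print("x" in haystack and "2" in haystack)  # True

# NFC, being canonical only, leaves the visual variants alone.
print(unicodedata.normalize("NFC", "\u00b2") == "\u00b2")  # True
```

Note the trade-off: compatibility normalization is lossy (², ①, and ﬁ lose their distinct identity), so it suits search keys and comparisons better than stored text.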

Unicode is locale-dependent

The Russian name Nikolay is written like this:

and encoded in Unicode as U+041D 0438 043A 043E 043B 0430 0439.

The Bulgarian name Nikolay is written:

and encoded in Unicode as U+041D 0438 043A 043E 043B 0430 0439. Exactly the same!

Wait a second! How does the computer know when to render Bulgarian-style glyphs and when to use Russian ones?

Short answer: it doesn’t. Unfortunately, Unicode is not a perfect system, and it has many shortcomings. Among them is assigning the same code point to glyphs that are supposed to look different, like Cyrillic Lowercase K and Bulgarian Lowercase K (both are U+043A).

From what I understand, Asian people get it much worse: many Chinese, Japanese, and Korean logograms that are written very differently get assigned the same code point:

Unicode’s motivation is to save code point space (my guess). Information on how to render is supposed to be transferred outside of the string, as locale/language metadata.

Unfortunately, it fails the original goal of Unicode:

[...] no escape sequence or control code is required to specify any character in any language.

In practice, dependency on locale brings a lot of problems:

在實際中,對區域設定的依賴帶來了很多問題:

- Being metadata, locale often gets lost.

- 作為後設資料,區域設定經常丟失。

- People are not limited to a single locale. For example, I can read and write English (USA), English (UK), German, and Russian. Which locale should I set my computer to?

- 人們不限於單一的區域設定。例如,我可以閱讀和寫作英語(美國)、英語(英國)、德語和俄語。我應該將我的計算機設定為哪個區域?

- It’s hard to mix and match. Like Russian names in Bulgarian text or vice versa. Why not? It’s the internet, people from all cultures hang out here.

- 混起來後再匹配很難。比如保加利亞文中的俄語名字,反之亦然。這種情況不是時有發生嗎?這是網際網路,來自各種文化的人都在這裡衝浪。

- There’s no place to specify the locale. Even making the two screenshots above was non-trivial because in most software, there’s no dropdown or text input to change locale.

- 沒有地方指定區域設定。即使是製作上面的兩個截圖也是比較複雜的,因為在大多數軟體中,沒有下拉選單或文字輸入來更改區域設定。

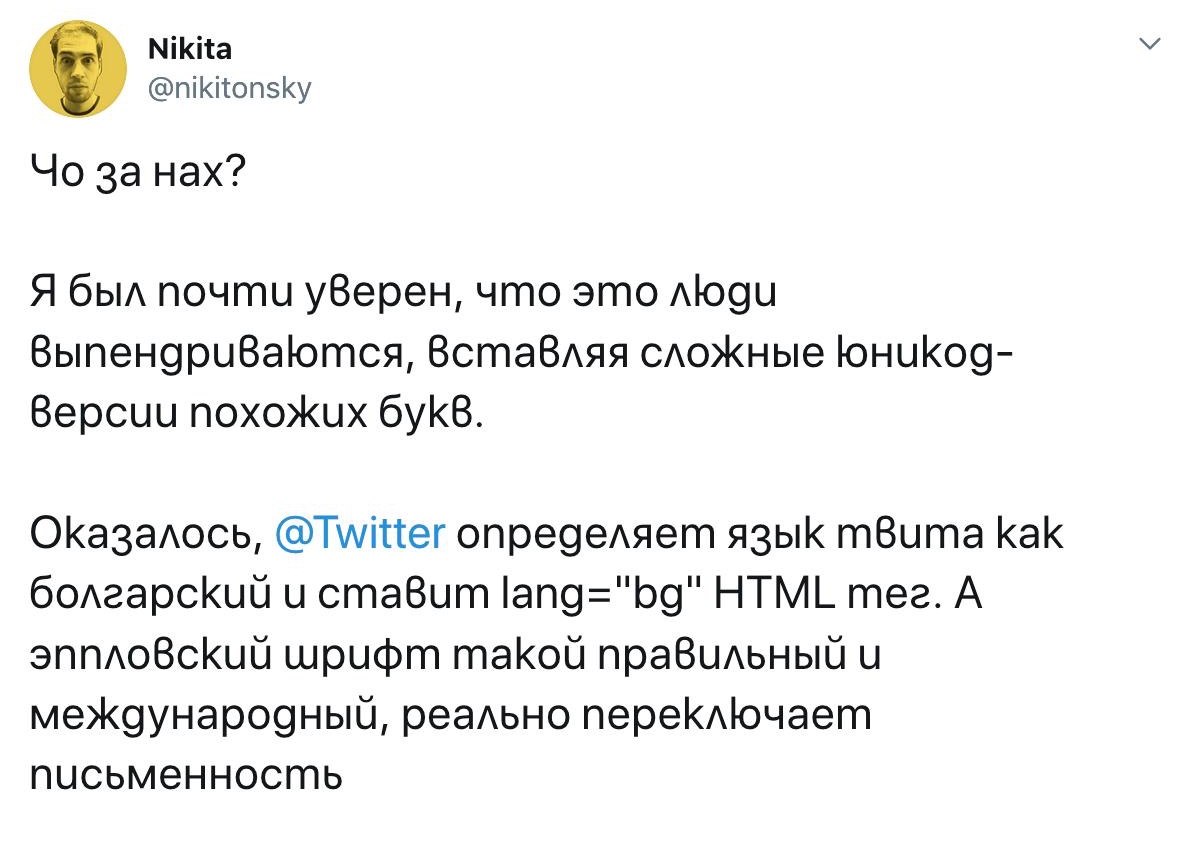

- When needed, it had to be guessed. For example, Twitter tries to guess the locale from the text of the tweet itself (because where else could it get it from?) and sometimes gets it wrong:

- 在需要的時候,我們只能靠猜。例如,Twitter 試圖從推文字身的文字中猜測區域設定(因為它還能從哪裡得到呢?)時有時會猜錯3:

Why does String::toLowerCase() accept Locale as an argument?

Another unfortunate example of locale dependence is the Unicode handling of dotless i in the Turkish language.

Unlike English, Turkish has two I variants: dotted and dotless. Unicode decided to reuse I and i from ASCII and only add two new code points: İ and ı.

Unfortunately, that made toLowerCase/toUpperCase behave differently on the same input:

```java
import java.util.Locale;

var en_US = Locale.of("en", "US");  // Locale.of is available since Java 19
var tr = Locale.of("tr");

"I".toLowerCase(en_US); // => "i"
"I".toLowerCase(tr);    // => "ı"
"i".toUpperCase(en_US); // => "I"
"i".toUpperCase(tr);    // => "İ"
```

So no, you can’t convert a string to lowercase without knowing what language that string is written in.
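Python’s `str.lower()` and `str.upper()` take no locale at all, so they behave like the `en_US` lines above. A sketch of a hypothetical Turkish-aware helper that handles the two dotted/dotless cases before delegating to `str.lower()` (a real application should rely on a locale-aware library such as ICU instead):

```python
def lower_tr(text: str) -> str:
    """Hypothetical Turkish-aware lowercasing: map the two I's explicitly,
    then let str.lower() handle everything else."""
    return text.replace("I", "\u0131").replace("\u0130", "i").lower()

print("I".lower(), lower_tr("I"))  # i ı
print(lower_tr("\u0130STANBUL"))   # istanbul
print(len("\u0130".lower()))       # 2: without a locale, İ becomes i + U+0307
```

The last line is a further surprise: with no locale, Python lowercases İ to i followed by U+0307 Combining Dot Above, so the string grows from one code point to two.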

I live in the US/UK, should I even care?

You still should. Even pure English text uses lots of “typographical signs” that aren’t available in ASCII, like:

- quotation marks “ ” ‘ ’,
- apostrophe ’,
- dashes – —,
- different variations of spaces (figure, hair, non-breaking),
- bullets • ■ ☞,
- currency symbols other than the $ (kind of tells you who invented computers, doesn’t it?): € ¢ £,
- mathematical signs: plus + and equals = are part of ASCII, but minus − and multiply × are not ¯\\\_(ツ)\_/¯,
- various other signs ¶ † §.

Hell, you can’t even spell café, piñata, or naïve without Unicode. So yes, we are all in it together, even Americans.

Touché.

What are surrogate pairs?

That goes back to Unicode v1. The first version of Unicode was supposed to be fixed-width. A 16-bit fixed width, to be exact:

They believed 65,536 characters would be enough for all human languages. They were almost right!

When they realized they needed more code points, UCS-2 (an original version of UTF-16 without surrogates) was already used in many systems. 16-bit and fixed-width, it only gives you 65,536 characters. What can you do?

Unicode decided to allocate some of these 65,536 characters to encode higher code points, essentially converting fixed-width UCS-2 into variable-width UTF-16.

A surrogate pair is two UTF-16 units used to encode a single Unicode code point. For example, D83D DCA9 (two 16-bit units) encodes one code point, U+1F4A9.

The top 6 bits of each unit in a surrogate pair are used for the mask, leaving 2×10 free bits to spare. Together, those 20 bits store the code point minus 0x10000; the high surrogate comes first, followed by the low one:

| | High surrogate | Low surrogate |
|---|---|---|
| Base value | D800 | DC00 |
| Bit pattern | 1101 10?? ???? ???? | 1101 11?? ???? ???? |

Technically, both halves of the surrogate pair can be seen as Unicode code points, too. In practice, the whole range from U+D800 to U+DFFF is allocated as “for surrogate pairs only”. Code points from there are not even considered valid in any other encodings.
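The bit layout above as a Python sketch (the helper names are hypothetical), checked against the D83D DCA9 example and against Python’s own UTF-16 encoder:

```python
from __future__ import annotations

def to_surrogate_pair(code_point: int) -> tuple[int, int]:
    """Split a supplementary code point (U+10000..U+10FFFF) into two UTF-16 units."""
    if not 0x10000 <= code_point <= 0x10FFFF:
        raise ValueError("only code points above U+FFFF need a surrogate pair")
    offset = code_point - 0x10000      # fits in 20 bits
    high = 0xD800 | (offset >> 10)     # 110110 + top 10 bits
    low = 0xDC00 | (offset & 0x3FF)    # 110111 + bottom 10 bits
    return high, low

def from_surrogate_pair(high: int, low: int) -> int:
    """Reassemble the code point from a high and a low surrogate."""
    return 0x10000 + ((high - 0xD800) << 10) + (low - 0xDC00)

high, low = to_surrogate_pair(0x1F4A9)
print(f"{high:04X} {low:04X}")                    # D83D DCA9
print(hex(from_surrogate_pair(high, low)))        # 0x1f4a9
print("\U0001F4A9".encode("utf-16-be").hex(" "))  # d8 3d dc a9
```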

Is UTF-16 still alive?

Yes!

The promise of a fixed-width encoding that covers all human languages was so compelling that many systems were eager to adopt it. Among them were Microsoft Windows, Objective-C, Java, JavaScript, .NET, Python 2, Qt, SMS, and CD-ROM!

Since then, Python has moved on and CD-ROM has become obsolete, but the rest are stuck with UTF-16 or even UCS-2. So UTF-16 lives on there as the in-memory representation.

In practical terms today, UTF-16 has roughly the same usability as UTF-8. It’s also variable-length; counting UTF-16 units is as useless as counting bytes or code points, grapheme clusters are still a pain, etc. The only difference is memory requirements.

The only downside of UTF-16 is that everything else is UTF-8, so it requires conversion every time a string is read from the network or from disk.

Also, fun fact: the number of planes Unicode has (17) is defined by how much you can express with surrogate pairs in UTF-16.
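The arithmetic behind the 17: code points up to U+FFFF fit in a single 16-bit unit, and a surrogate pair adds 10 + 10 free bits on top of a base of 0x10000:

$$
\underbrace{2^{16}}_{\text{U+0000..U+FFFF}} + \underbrace{2^{10} \cdot 2^{10}}_{\text{surrogate pairs}} = 65{,}536 + 1{,}048{,}576 = 1{,}114{,}112 = 17 \times 65{,}536
$$

So the highest code point is 0x10000 + 2^20 − 1 = 0x10FFFF, the limit mentioned at the start, and the code space divides into exactly 17 planes of 65,536. (The 2,048 surrogate code points are counted in that total even though they can never be characters themselves.)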

Conclusion

To sum it up:

- Unicode has won.
- UTF-8 is the most popular encoding for data in transfer and at rest.
- UTF-16 is still sometimes used as an in-memory representation.
- The two most important views for strings are bytes (allocate memory/copy/encode/decode) and extended grapheme clusters (all semantic operations).
- Using code points for iterating over a string is wrong. They are not the basic unit of writing. One grapheme could consist of multiple code points.
- To detect grapheme boundaries, you need Unicode tables.
- Use a Unicode library for everything Unicode, even boring stuff like strlen, indexOf, and substring.
- Unicode updates every year, and rules sometimes change.
- Unicode strings need to be normalized before they can be compared.
- Unicode depends on locale for some operations and for rendering.
- All this is important even for pure English text.

Overall, yes, Unicode is not perfect, but the fact that

- an encoding exists that covers all possible languages at once,
- the entire world agrees to use it,
- we can completely forget about encodings and conversions and all that stuff

is a miracle. Send this to your fellow programmers so they can learn about it, too.

There’s such a thing as plain text, and it’s encoded with UTF-8.

Thanks to Lev Walkin and my patrons for reading early drafts of this article.

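Translator's notes

1. A Chinese translation of this 2003 article is available under the title 每一個軟體開發者都必須瞭解的關於 Unicode 統一碼和字符集的基本知識(沒有任何藉口!).
2. A grapheme (字位, also rendered in Chinese as 形素 or 字素) is the smallest meaningful unit of written symbols; the term was coined by analogy with the phonological term “phoneme” (音位/音素).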